Real-Time Automated Shark Detection System

Scout Aerial has been working on an automated shark detection algorithm for the past twelve months, and this report details some of the results achieved during testing. Because the approach taken in this project is new, Scout Aerial needed a large amount of sample data to build a baseline algorithm. Once we have identified the data requirements and developed a machine learning tool for this algorithm, we can build a solution that is scalable and suited to the shark management process. By undertaking a pilot project, we are able to collect the required data, identify the particular environments (type and state), build a base algorithm and understand the scale requirements.

The main focus has been on training the algorithm, which is an extremely manual process. A team of four pilots, with two aircraft and supporting hardware and software, was deployed to test the real-time system.

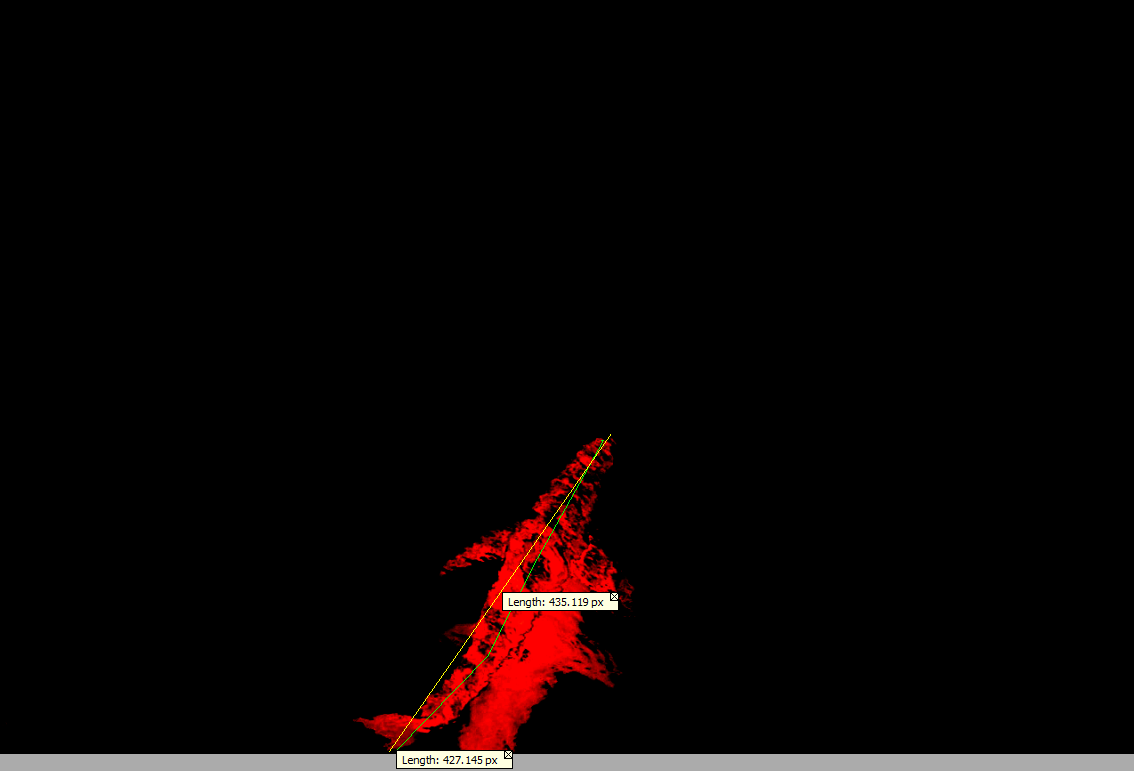

We have also been working on the hardware design so that it can be attached to any RPA (remotely piloted aircraft) and is fit for purpose. The hardware can be offered in multiple configurations or “as required” (multispectral, RGB, NIR), and we will collect different data samples in order to “train” the base algorithm in “layers”, making it more accurate over time.

When developing an algorithm, writing the required specification helps to identify and reduce subconscious biases. Using an algorithm makes decision making a more rational process.

In addition to making the process more rational, an algorithm makes the process more efficient and more consistent. Efficiency is an inherent result of the analysis and specification process. Consistency comes both from using the same specified process every time and from increasing skill in applying it. Over time, the algorithm develops and becomes “self-learning”, which means its efficiency keeps improving. The very first step in developing an algorithm is collecting the data that will serve as its foundation.

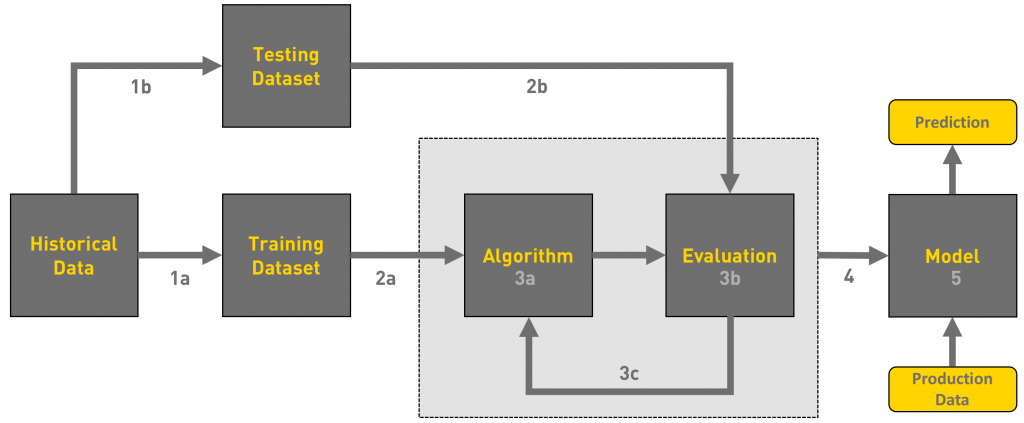

Step 1a: Select the dataset for training, including both historical and actual data.

Step 1b: A smaller part of the dataset is set aside for testing.

Step 2a: The training dataset is passed to an ML algorithm such as Linear Regression.

Step 2b: The testing dataset is prepared and kept ready for evaluation.

Step 3a: The algorithm is applied to each data point in the training dataset.

Step 3b: The resulting parameters are then applied to the test dataset to compare the outcome.

Step 3c: This process is iterated until the algorithm generates values that closely match the test data.

Step 4: The final model emerges, with the right set of parameters tuned for the given dataset.

Step 5: The model is used in production to make predictions on new data points.
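To make the steps above more concrete, here is a minimal Python sketch of the same loop using the Linear Regression example the steps mention. It is only an illustration of the train/test workflow, not Scout Aerial's shark detection model (which works on imagery). The function names, the use of gradient descent, the learning rate, the stopping tolerance and the toy data (points on the line y = 2x + 1) are all assumptions made for this example.

```python
import random

def train_test_split(data, test_fraction=0.2, seed=0):
    """Steps 1a/1b: shuffle the data, then hold out a smaller part for testing."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_test = max(1, int(len(rows) * test_fraction))
    return rows[n_test:], rows[:n_test]  # (training set, test set)

def mse(w, b, rows):
    """Mean squared error of the line y = w*x + b over (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)

def fit_linear_regression(train, test, lr=0.01, tol=1e-3, max_epochs=10_000):
    """Steps 2a-4: fit by gradient descent, checking the test set after each pass."""
    w, b = 0.0, 0.0
    n = len(train)
    for _ in range(max_epochs):
        # Step 3a: apply the current model to every training point and
        # compute the gradient of the mean squared error.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
        # Steps 3b/3c: apply the parameters to the held-out data and stop
        # once the predictions are a close match.
        if mse(w, b, test) < tol:
            break
    return w, b  # Step 4: the tuned parameters

# Hypothetical, noise-free toy data on the line y = 2x + 1.
data = [(i / 10, 2 * (i / 10) + 1) for i in range(50)]
train, test = train_test_split(data)
w, b = fit_linear_regression(train, test)

# Step 5: use the final model on a new data point.
print(f"w={w:.2f}, b={b:.2f}, prediction at x=6: {w * 6 + b:.2f}")
```

Stopping against the test data mirrors Step 3c as written; in practice a separate validation split is often used for this, so that the final test score remains an unbiased estimate.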

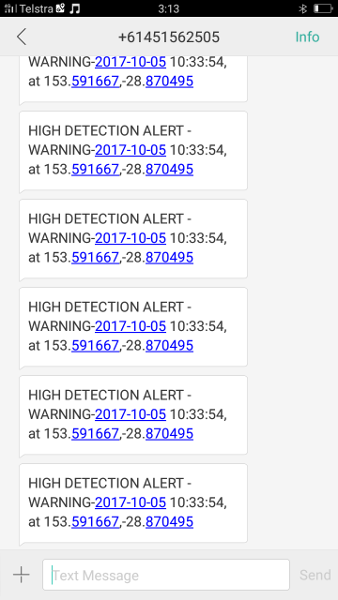

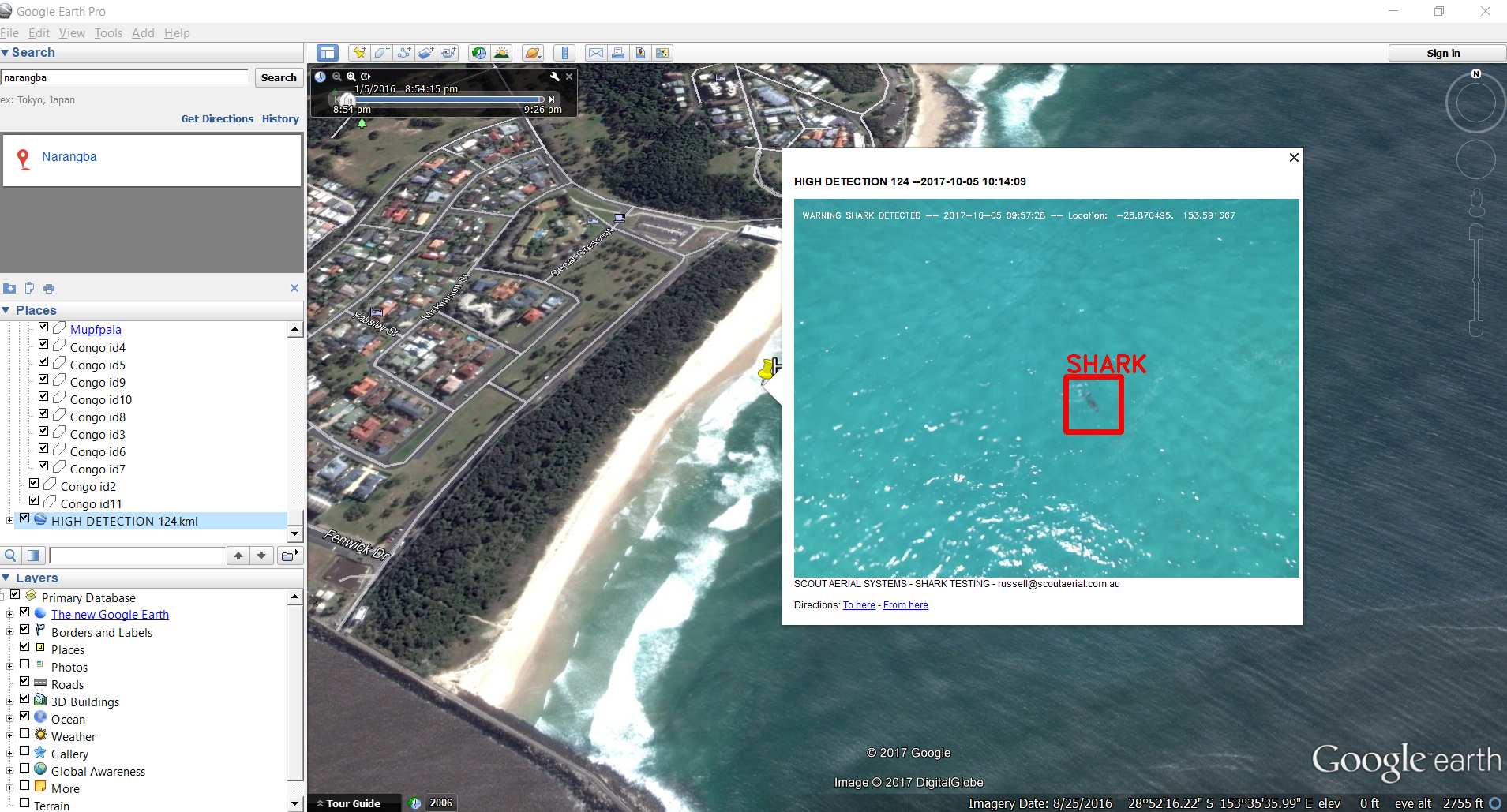

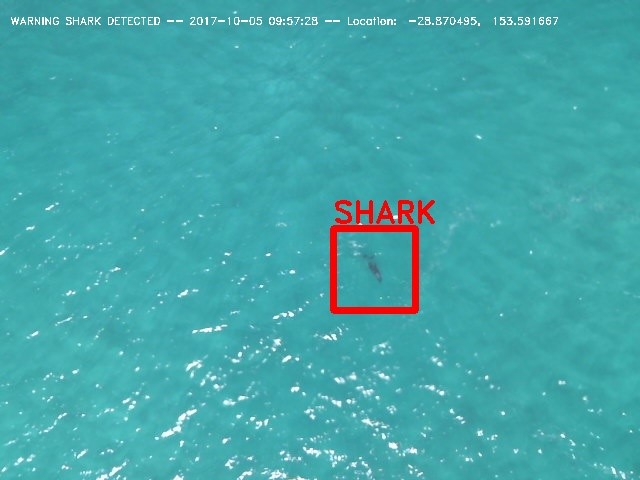

The data output can take many forms. We have chosen an SMS alert with geolocation, which can be attached to a KML file and opened immediately in Google Earth. This can easily be pushed into an app if required. See below for an example:
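As a rough illustration of the alert output described above (not Scout Aerial's actual message or file format), the Python sketch below builds an SMS-style text and a single-placemark KML document for one detection using only the standard library. The function names, message wording, label, timestamp and coordinates are hypothetical, and actually sending the SMS would depend on whichever gateway is used, so that part is left out.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"

def format_sms_alert(label, lat, lon, timestamp):
    """Compose a short alert body (hypothetical wording); sending is not shown."""
    return f"ALERT: {label} detected {timestamp} at {lat:.5f}, {lon:.5f}"

def detection_to_kml(label, lat, lon, timestamp):
    """Build a KML document containing one placemark for a single detection."""
    kml = ET.Element("kml", xmlns=KML_NS)
    doc = ET.SubElement(kml, "Document")
    placemark = ET.SubElement(doc, "Placemark")
    ET.SubElement(placemark, "name").text = label
    ET.SubElement(placemark, "description").text = f"Detected at {timestamp}"
    point = ET.SubElement(placemark, "Point")
    # KML lists coordinates as longitude,latitude (note the order).
    ET.SubElement(point, "coordinates").text = f"{lon},{lat}"
    body = ET.tostring(kml, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical detection near a beach.
sms = format_sms_alert("Possible shark", -33.8915, 151.2767, "09:30")
kml = detection_to_kml("Possible shark", -33.8915, 151.2767, "09:30")
print(sms)
print(kml)
```

Saving the KML string to a file with a `.kml` extension lets it be opened directly in Google Earth, and the same fields could be sent to an app instead of, or alongside, the SMS.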

Get in contact with us today if you are interested in having an algorithm or computer vision system developed to reduce your workload. Once developed, these tools can increase your efficiency drastically. We use technology to create change for the better, so get on board!